Does your company maintain web servers on a daily basis? If so, keep reading.

Not too long ago, back in 2010, the company now known as Docker, Inc. was launched by a team of professionals. It went on to create an open-source project that automates the deployment of code inside software containers. The product was announced to the public in 2013 and has both free and premium tiers.

What exactly is Docker though?

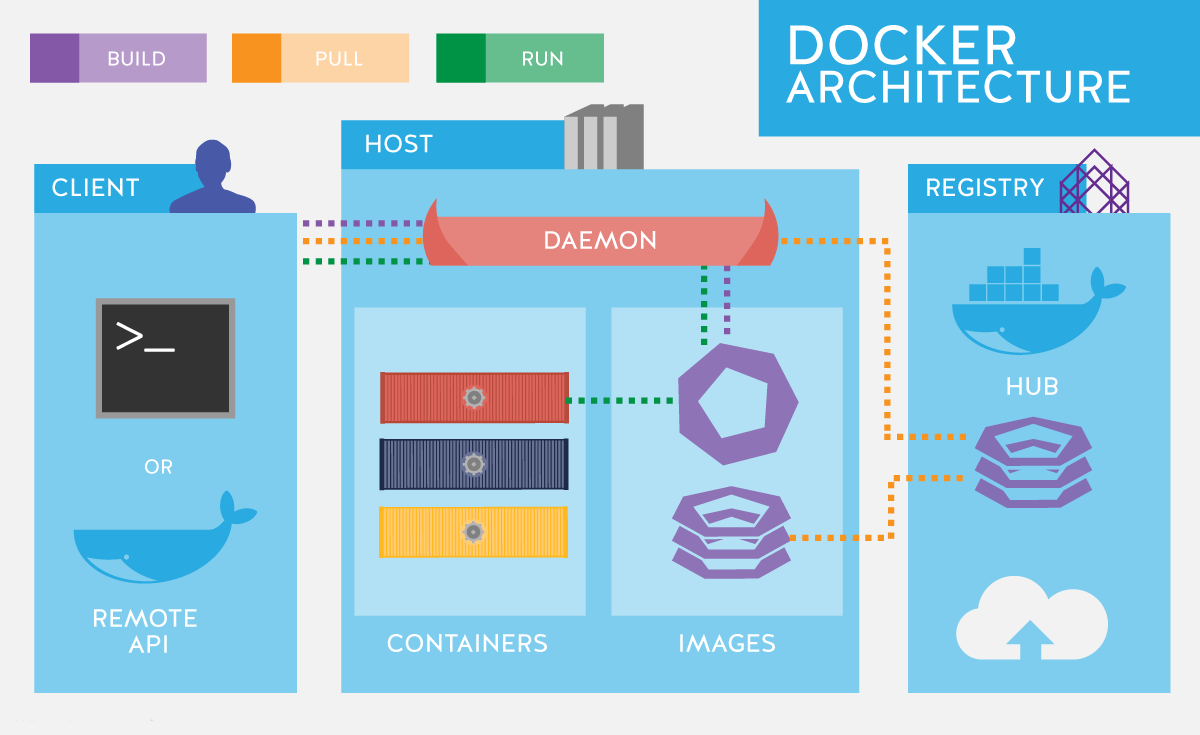

Simply put, it's a set of coupled software-as-a-service (SaaS) and platform-as-a-service (PaaS) products that use operating-system-level virtualization to develop and deliver software in packages called containers.

Docker container technology was launched in 2013 as the open-source Docker Engine. It leveraged existing computing concepts around containers, specifically the Linux kernel primitives known as cgroups and namespaces. What sets Docker's technology apart is its focus on what developers and systems operators need: separating application dependencies from infrastructure.
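To make the namespaces idea a little more concrete, here is a minimal Python sketch that lists the namespaces the current process belongs to. It assumes a Linux system that exposes `/proc/<pid>/ns`; the helper name `list_namespaces` is our own and is not part of Docker. On other systems it simply returns an empty result.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace_name: identifier} for a process.

    Assumption: a Linux host where /proc/<pid>/ns exists. On any other
    system, or for an unknown pid, an empty dict is returned.
    """
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        for name in sorted(os.listdir(ns_dir)):
            try:
                # Each entry is a symlink whose target identifies the namespace.
                namespaces[name] = os.readlink(os.path.join(ns_dir, name))
            except OSError:
                namespaces[name] = "unreadable"
    except OSError:
        return {}
    return namespaces


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name}: {ident}")
```

Two processes that show the same identifier for an entry share that namespace. A process inside a container would typically show different identifiers from a process on the host, which is what gives it its own isolated view of things like process IDs and the network.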

In other words, the container is another form of virtualization. The virtual machines we all know allow a single piece of hardware to host multiple operating systems as software. Running on a host machine, they let the hardware's power be shared among different users while appearing as separate machines or services. Containers take this to the next level: they virtualize the OS itself, splitting it into isolated compartments in which containerized applications run.

What is a container actually?

A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. A Docker container image is a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries, and settings.

Available for both Linux and Windows-based applications, containerized software will always run the same, regardless of the infrastructure. Containers isolate software from its environment and ensure that it works uniformly despite differences between, for instance, development and staging.

Why do we prefer to use Docker?

As we already pointed out, containers share the host's OS. This makes them more efficient: instead of virtualizing hardware, containers rest on top of a single Linux instance, so nearly all of the machine's resources go to your processes rather than to running separate guest operating systems.

Docker also enables developers to easily pack, ship, and run any application as a lightweight, portable, self-sufficient container, which can run virtually anywhere. Every developer can isolate code into a single container and in this way can modify and update the program more easily.
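The "pack, ship, and run" steps map roughly onto three Docker CLI commands: `docker build`, `docker push`, and `docker run`. The Python sketch below only assembles those commands as argument lists, without executing anything. The function name `docker_workflow` and the image tag in the usage example are hypothetical, chosen purely for illustration.

```python
import shlex


def docker_workflow(image_tag, build_context="."):
    """Return the pack / ship / run commands as argument lists.

    Nothing is executed here; the lists could be handed to
    subprocess.run() on a machine where Docker is installed.
    """
    return [
        # Pack: build an image from the Dockerfile in build_context.
        ["docker", "build", "-t", image_tag, build_context],
        # Ship: upload the image to the registry named in the tag.
        ["docker", "push", image_tag],
        # Run: start a container and remove it once it exits.
        ["docker", "run", "--rm", image_tag],
    ]


if __name__ == "__main__":
    # Hypothetical image tag, for illustration only.
    for argv in docker_workflow("example.com/team/web-app:1.0"):
        print(shlex.join(argv))
```

Keeping the commands as lists rather than one long string avoids shell-quoting problems if they are later passed to `subprocess.run()`.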

In short, Docker allows more applications to run on the same hardware at the same time, helps developers work better and faster, and, most of all, makes managing and deploying applications much easier. With all that in mind, how could we not use it?
